Time-Evidence-Fusion-Network

Time Evidence Fusion Network (TEFN): Multi-source View in Long-Term Time Series Forecasting

Updates

🚩 News (2024.05.14): TEFN is now compatible with the MPS backend and can be trained on Apple MPS devices.

Overview

This is the official code implementation of the paper “Time Evidence Fusion Network: Multi-source View in Long-Term Time Series Forecasting”. The code implementation refers to

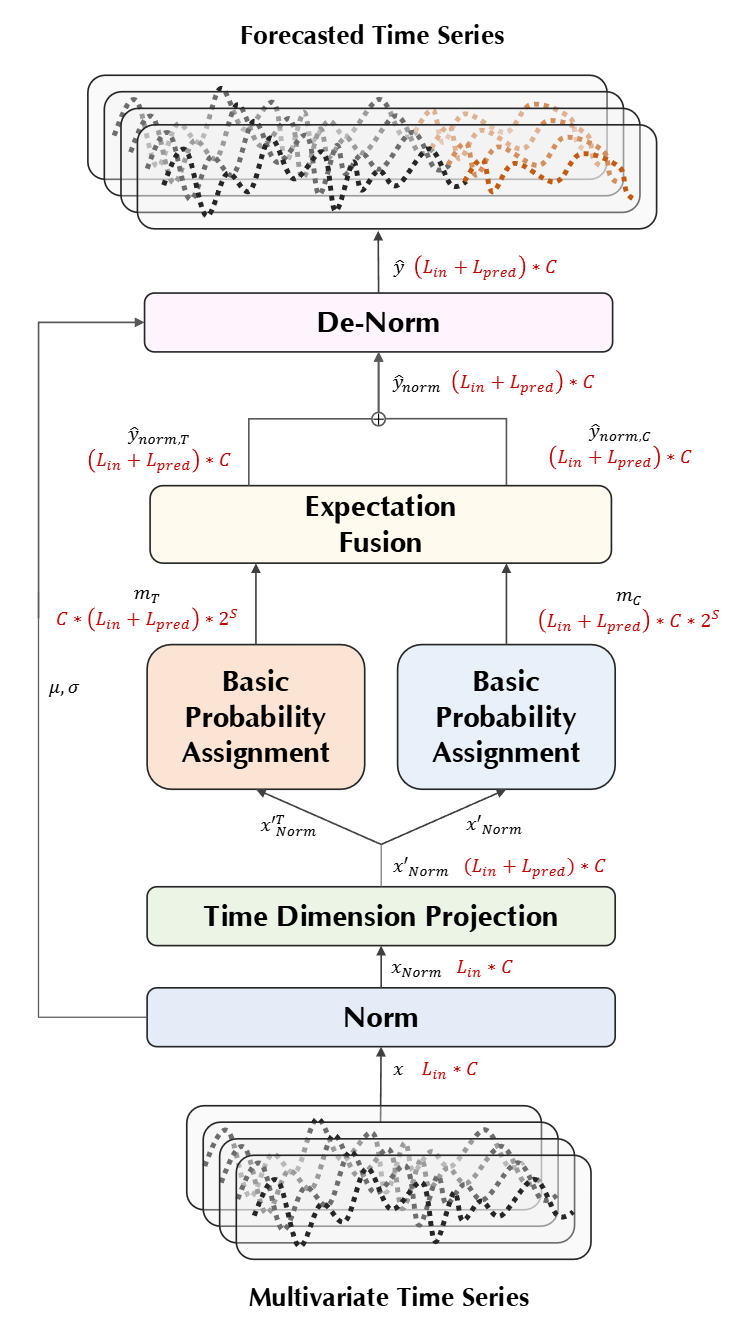

The Time Evidence Fusion Network (TEFN) is a groundbreaking deep learning model designed for long-term time series

forecasting. It integrates the principles of information fusion and evidence theory to achieve superior performance in

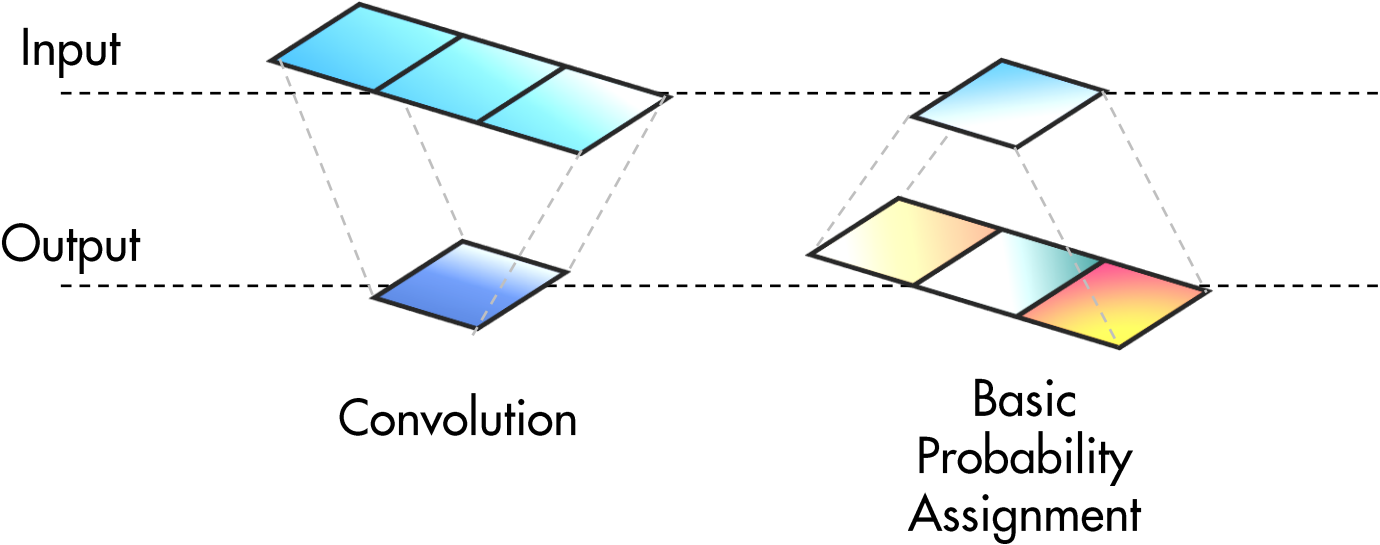

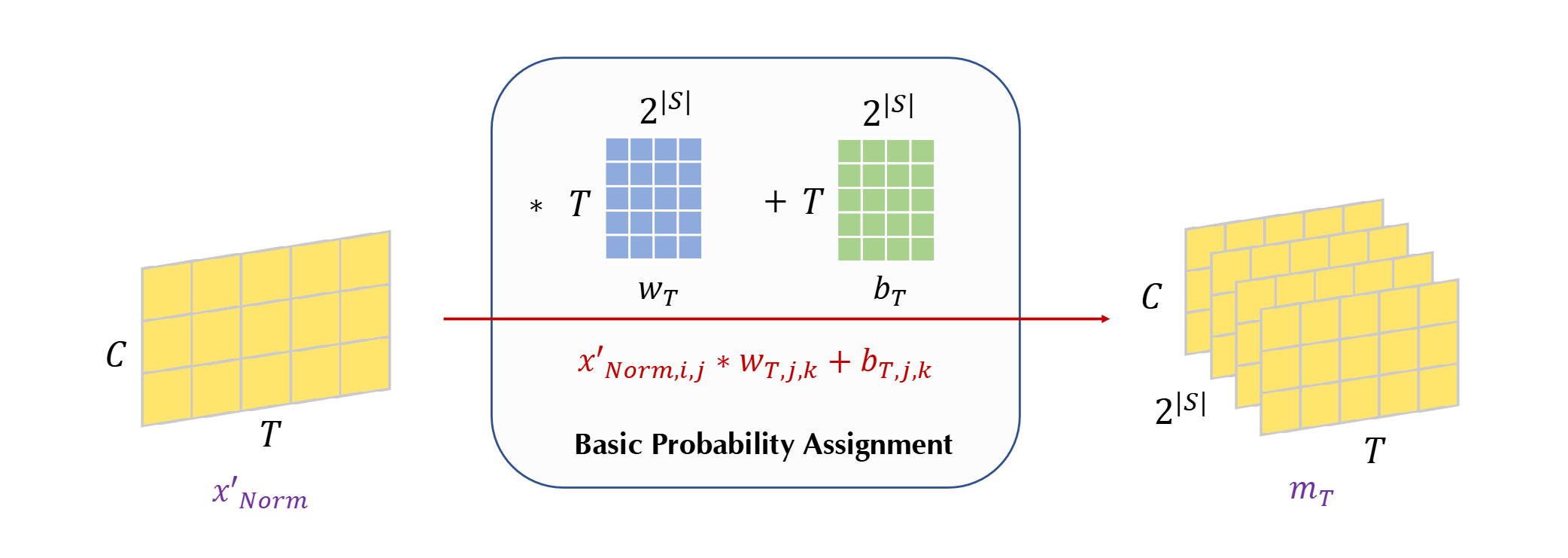

real-world applications where timely predictions are crucial. TEFN introduces the Basic Probability Assignment (BPA)

Module, leveraging fuzzy theory, and the Time Evidence Fusion Network to enhance prediction accuracy, stability, and

interpretability.
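To make the idea of a fuzzy-theory BPA concrete, here is a minimal, framework-free sketch. It is not TEFN's BPA Module, which is part of a trained network and is not reproduced here. It only shows the general principle under our own assumptions: an observation's membership degrees in a few fuzzy sets (here hypothetical Gaussian sets named "low", "medium" and "high") are normalized into a basic probability assignment over singleton hypotheses. The helper names `gaussian_membership` and `fuzzy_bpa` are hypothetical.

```python
import math
from typing import Dict


def gaussian_membership(x: float, center: float, width: float) -> float:
    """Degree in [0, 1] to which x belongs to a fuzzy set centred at `center`."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def fuzzy_bpa(x: float, centers: Dict[str, float], width: float = 1.0) -> Dict[str, float]:
    """Turn the fuzzy memberships of one observation into a mass over singleton hypotheses.

    Normalising the memberships so that they sum to 1 makes the result a valid
    (Bayesian) basic probability assignment.
    """
    memberships = {label: gaussian_membership(x, c, width) for label, c in centers.items()}
    total = sum(memberships.values())
    if total == 0.0:
        # x is far from every centre (all memberships underflowed): spread mass uniformly.
        return {label: 1.0 / len(centers) for label in centers}
    return {label: m / total for label, m in memberships.items()}


if __name__ == "__main__":
    # Hypothetical fuzzy sets for a single input channel.
    centers = {"low": 0.0, "medium": 5.0, "high": 10.0}
    print(fuzzy_bpa(4.0, centers, width=2.0))
```

An input of 4.0 lies closest to the "medium" centre, so most of the mass goes to that hypothesis, while the remaining mass expresses the uncertainty between neighbouring sets.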

Key Features

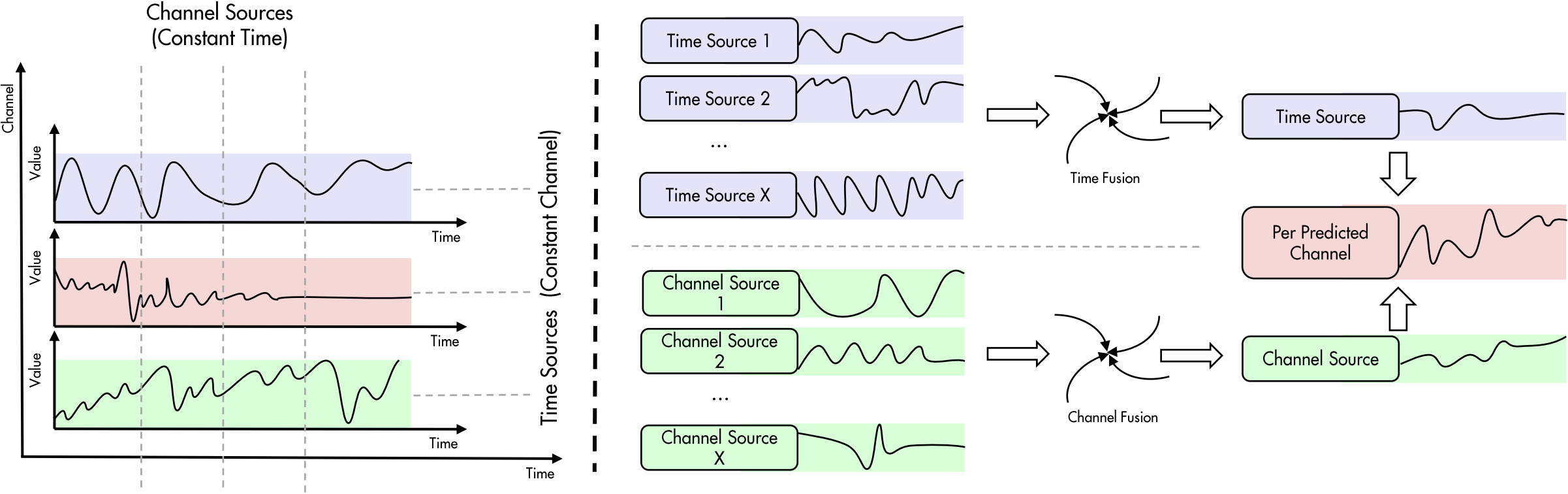

- Information Fusion Perspective: TEFN addresses time series forecasting from a unique angle, focusing on the fusion of multi-source information to boost prediction accuracy.

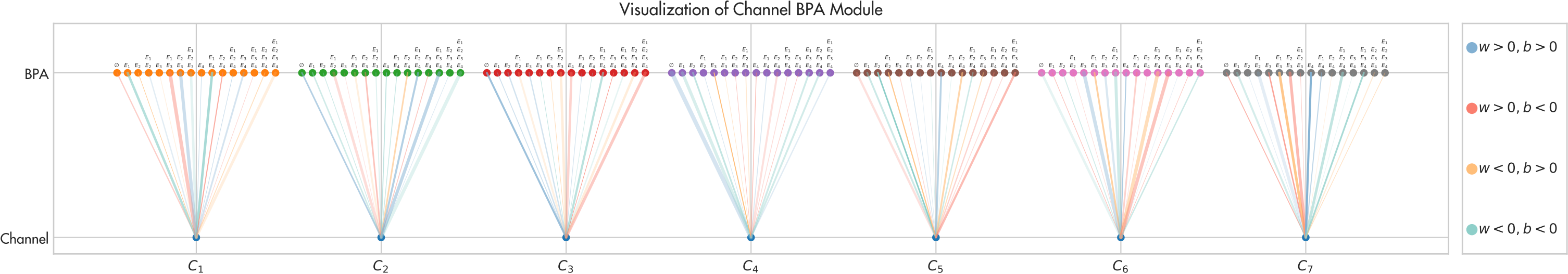

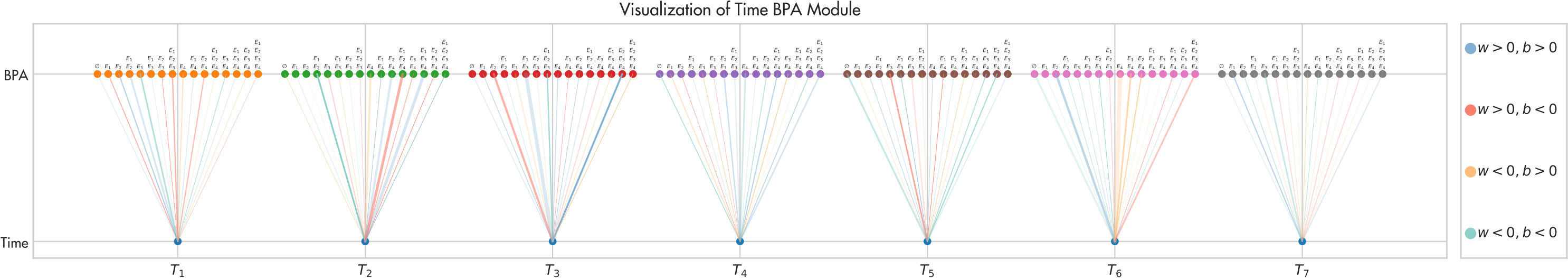

- BPA Module: At its core, TEFN incorporates a BPA Module that maps diverse information sources to probability distributions related to the target outcome. This module exploits the interpretability of evidence theory, using fuzzy membership functions to represent uncertainty in predictions.

- Interpretability: Due to its roots in fuzzy logic, TEFN provides clear insights into the decision-making process, enhancing model explainability.

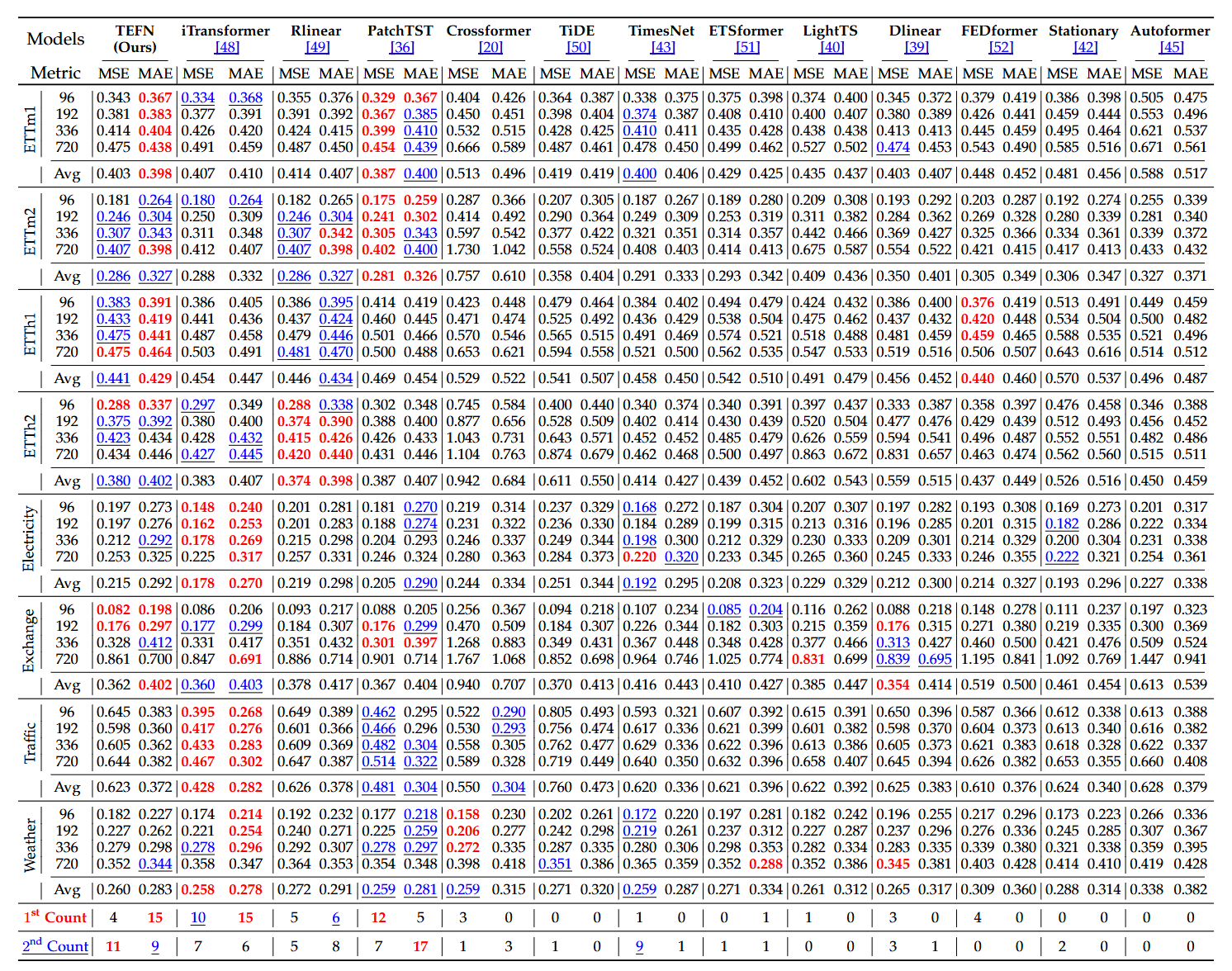

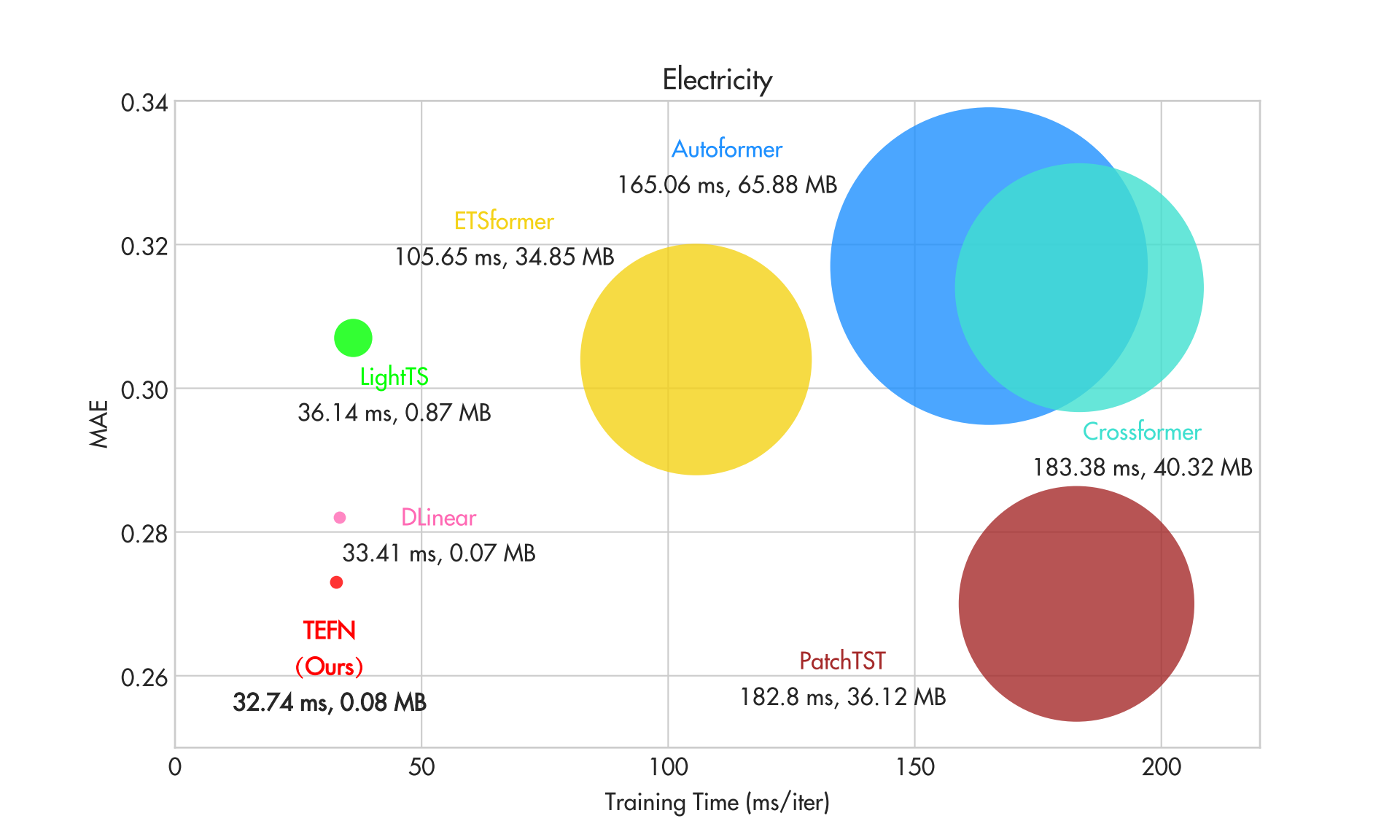

- State-of-the-Art Performance: TEFN achieves competitive results, with prediction errors comparable to leading models such as PatchTST, while remaining efficient and requiring fewer parameters than models such as DLinear.

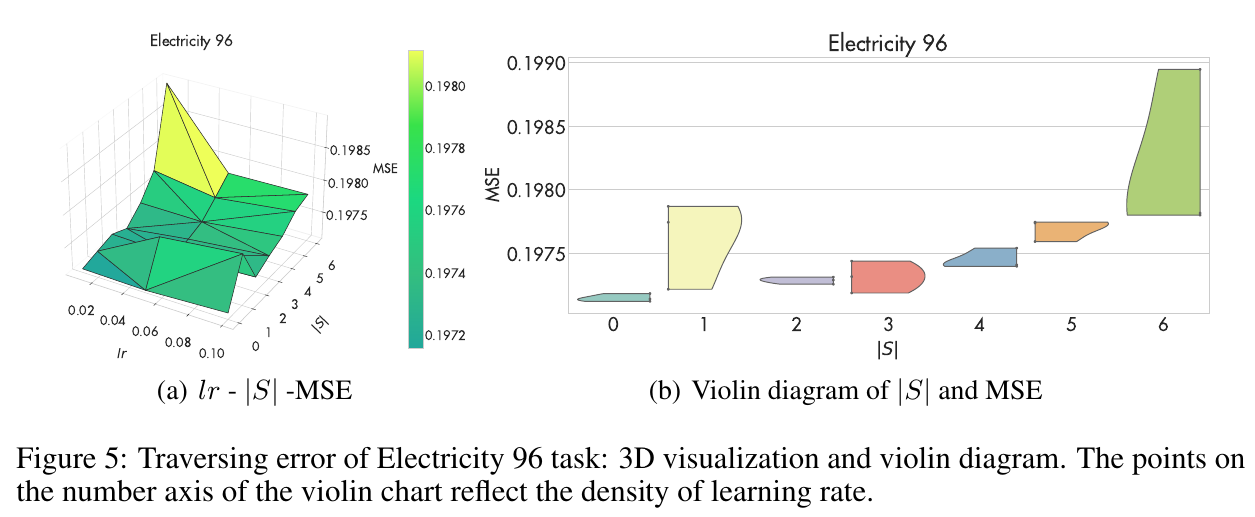

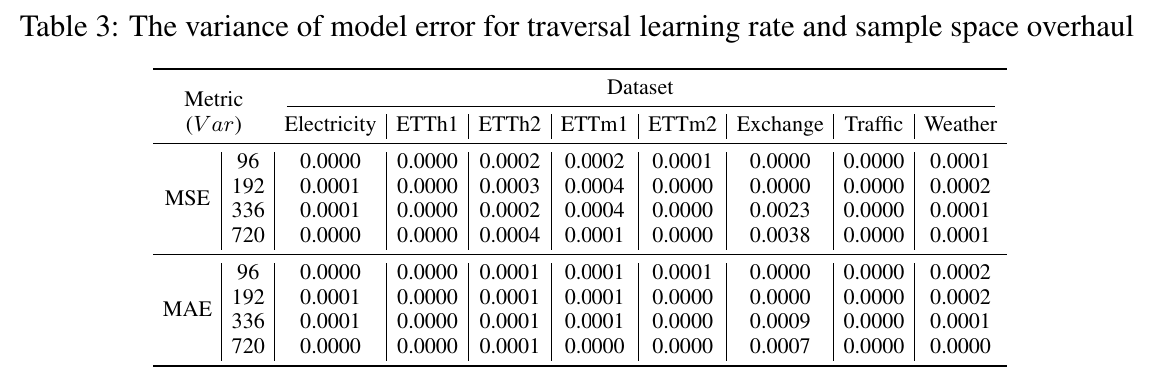

- Robustness and Stability: The model is robust to hyperparameter choices, showing minimal performance fluctuations even when hyperparameters are selected at random, which ensures consistent performance across various settings.

- Efficiency: With short training times and a compact model footprint, TEFN is particularly suitable for resource-constrained environments.
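The features above describe fusing evidence from multiple sources, but this README does not spell out the exact fusion rule TEFN uses. As a textbook illustration of evidence fusion only (not TEFN's method), the sketch below applies Dempster's rule of combination to BPAs whose focal elements are all singletons. The source names and hypotheses ("up", "flat", "down") are hypothetical, as are the helper names `dempster_combine` and `fuse_sources`.

```python
from functools import reduce
from typing import Dict, Iterable

Mass = Dict[str, float]


def dempster_combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule for two BPAs whose focal elements are all singletons.

    Two singleton hypotheses intersect only when they are equal, so the
    non-conflicting mass is sum_A m1(A) * m2(A), which equals 1 - K.
    """
    agreement = {a: m1[a] * m2.get(a, 0.0) for a in m1}
    norm = sum(agreement.values())
    if norm == 0.0:
        raise ValueError("total conflict: the sources share no supported hypothesis")
    return {a: v / norm for a, v in agreement.items()}


def fuse_sources(masses: Iterable[Mass]) -> Mass:
    """Fuse any number of sources; Dempster's rule is commutative and associative."""
    return reduce(dempster_combine, masses)


if __name__ == "__main__":
    # Hypothetical evidence from two sources about the next step of a series.
    source_a = {"up": 0.6, "flat": 0.3, "down": 0.1}
    source_b = {"up": 0.5, "flat": 0.4, "down": 0.1}
    print(fuse_sources([source_a, source_b]))
```

When two sources agree, fusion sharpens the result: here "up" receives more mass after combination than either source gave it alone, because conflicting pairs are discarded and the remaining mass is renormalized.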

Getting Started

Requirements

- Python >= 3.6

- PyTorch >= 1.7.0 (training on the Apple MPS backend requires PyTorch >= 1.12)

- Other dependencies listed in requirements.txt
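Before installing the remaining dependencies, it can help to confirm the two minimum versions listed above. The following is a small sketch of such a check; the helper names `parse_version` and `meets_minimum` are hypothetical and are not part of this repository. The comparison is numeric, so "1.10.0" correctly counts as newer than "1.7.0".

```python
import re
import sys
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the leading numeric part, e.g. '1.13.1+cu117' -> (1, 13, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, required: str) -> bool:
    """Compare versions numerically, padding the shorter tuple with zeros."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


if __name__ == "__main__":
    py = ".".join(str(p) for p in sys.version_info[:3])
    print("Python", py, "OK" if meets_minimum(py, "3.6") else "too old")
    try:
        import torch
    except ImportError:
        raise SystemExit("PyTorch is not installed")
    print("PyTorch", torch.__version__, "OK" if meets_minimum(torch.__version__, "1.7.0") else "too old")
    print("CUDA available:", torch.cuda.is_available())
    mps = getattr(torch.backends, "mps", None)  # absent before PyTorch 1.12
    print("MPS available:", bool(mps is not None and mps.is_available()))
```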

Installation

Clone the repository and install the dependencies:

git clone https://github.com/ztxtech/Time-Evidence-Fusion-Network.git

cd Time-Evidence-Fusion-Network

pip install -r requirements.txt

Usage

Download Dataset

You can obtain datasets from ./dataset.
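Long-term forecasting models are trained on pairs of a lookback window and the horizon that follows it. The sketch below shows that setup on a CSV file, assuming (not confirmed by this README) that each dataset file has a timestamp in the first column followed by numeric channels, as in the datasets hosted with Autoformer. The names `seq_len` and `pred_len` are borrowed from common Time Series Library conventions; whether TEFN's configs use them is an assumption, and the helpers `load_series` and `sliding_windows` are hypothetical.

```python
import csv
import io
from typing import Iterable, List, Tuple

Rows = List[List[float]]


def load_series(lines: Iterable[str]) -> Tuple[List[str], Rows]:
    """Read CSV text whose first column is a timestamp and whose other columns are numeric."""
    reader = csv.reader(lines)
    header = next(reader)
    rows = [[float(v) for v in row[1:]] for row in reader if row]
    return header[1:], rows


def sliding_windows(rows: Rows, seq_len: int, pred_len: int) -> List[Tuple[Rows, Rows]]:
    """Pair each lookback window of seq_len steps with the pred_len steps that follow it."""
    windows = []
    for start in range(len(rows) - seq_len - pred_len + 1):
        past = rows[start:start + seq_len]
        future = rows[start + seq_len:start + seq_len + pred_len]
        windows.append((past, future))
    return windows


if __name__ == "__main__":
    # Tiny in-memory stand-in for a file in ./dataset; for a real file use
    # open(path, newline="") instead of io.StringIO.
    sample = "date,a,b\n" + "\n".join(f"2020-01-0{i},{i},{10 * i}" for i in range(1, 7))
    channels, rows = load_series(io.StringIO(sample))
    for past, future in sliding_windows(rows, seq_len=3, pred_len=2):
        print(past, "->", future)
```

A series of length N yields N - seq_len - pred_len + 1 windows, so long horizons on short datasets leave few training samples.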

Load Config

- In ./run_config.py, set config_path to the configuration file you want to use:

config_path = '{your chosen config file path}'

- Run ./run_config.py directly:

python run_config.py
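This README does not show what run_config.py does with config_path. As a hedged illustration of one common pattern, the sketch below reads a configuration file into an argparse.Namespace so that settings can be accessed as attributes (for example args.gpu). It assumes each file in ./configs is a flat JSON object; the helpers `config_from_text` and `load_config`, the script name, and the key seq_len used in the test are hypothetical.

```python
import argparse
import json
import sys


def config_from_text(text: str) -> argparse.Namespace:
    """Parse a flat JSON object and expose its keys as attributes (args.gpu, ...)."""
    settings = json.loads(text)
    if not isinstance(settings, dict):
        raise ValueError("the configuration must be a JSON object")
    return argparse.Namespace(**settings)


def load_config(config_path: str) -> argparse.Namespace:
    """Read a configuration file such as one of the *.json files in ./configs."""
    with open(config_path, encoding="utf-8") as f:
        return config_from_text(f.read())


if __name__ == "__main__":
    if len(sys.argv) != 2:
        sys.exit("usage: python load_config_sketch.py path/to/config.json")
    print(vars(load_config(sys.argv[1])))
```

Keeping each experiment in its own JSON file means switching experiments only requires changing one path, which is the workflow the steps above describe.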

Switching Running Devices

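- Find the required configuration file (*.json) in ./configs.

- In that *.json file, set the "gpu" entry: a CUDA device index (for example 0) for an NVIDIA GPU, or "mps" for an Apple MPS device. JSON does not allow comments, so keep exactly one "gpu" entry.

NVIDIA CUDA device 0:

{
  ...
  "gpu": 0,
  ...
}

Apple MPS device:

{
  ...
  "gpu": "mps",
  ...
}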
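How the repository turns the "gpu" value into a device is not shown here. The sketch below is one hedged way to do it: a hypothetical helper `resolve_device` maps the config value to a PyTorch device string, and the main block falls back to the CPU when the requested backend is unavailable. `torch.device`, `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are standard PyTorch calls (the last one exists from PyTorch 1.12).

```python
import sys
from typing import Optional, Union


def resolve_device(gpu: Optional[Union[int, str]]) -> str:
    """Map a config "gpu" value to a PyTorch device string.

    0, 1, ... -> "cuda:0", "cuda:1", ...; "mps" -> "mps"; None or "cpu" -> "cpu".
    """
    if gpu is None:
        return "cpu"
    if isinstance(gpu, bool):  # bool is a subclass of int, so reject it first
        raise ValueError("gpu must be a device index or 'mps', not a boolean")
    if isinstance(gpu, int):
        if gpu < 0:
            raise ValueError(f"CUDA device index must be >= 0, got {gpu}")
        return f"cuda:{gpu}"
    value = str(gpu).strip().lower()
    if value in ("mps", "cpu"):
        return value
    if value.isdigit():  # tolerate indices written as strings, e.g. "0"
        return f"cuda:{int(value)}"
    raise ValueError(f"unsupported gpu setting: {gpu!r}")


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        sys.exit("PyTorch is not installed")
    name = resolve_device("mps")
    mps = getattr(torch.backends, "mps", None)
    if name == "mps" and not (mps is not None and mps.is_available()):
        name = "cpu"  # fall back when the MPS backend is unavailable
    elif name.startswith("cuda") and not torch.cuda.is_available():
        name = "cpu"  # fall back when CUDA is unavailable
    print(torch.device(name))
```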

Other Operations

For other related operations, refer to

Citation

If you find TEFN useful in your research, please cite our work:

@misc{TEFN,
  title={Time Evidence Fusion Network: Multi-source View in Long-Term Time Series Forecasting},
  author={Tianxiang Zhan and Yuanpeng He and Zhen Li and Yong Deng},
  year={2024},
  journal={arXiv}
}

Acknowledgement

We greatly appreciate the following GitHub repositories for their valuable code and efforts.

From Time Series Library

This library is constructed based on the following repos:

- Forecasting: https://github.com/thuml/Autoformer.

All the experiment datasets are public, and we obtain them from the following links:

- Long-term Forecasting and Imputation: https://github.com/thuml/Autoformer.

- Short-term Forecasting: https://github.com/ServiceNow/N-BEATS.

Contact

If you have any questions or suggestions, feel free to contact:

- (Primary) Tianxiang Zhan (ztxtech@std.uestc.edu.cn)

- Yuanpeng He (heyuanpeng@stu.pku.edu.cn)

Alternatively, open an issue in this repository's GitHub Issues.